Why It’s Troubling That Artificial Intelligence Created This Cover (with Human Prompts)

The era of AI art — digital paintings, drawings and photographs created by artificial intelligence — is upon us. Who could have imagined a time when an attractive, vibrant piece of “original” art could be created simply by typing a few words into a computer? And at what cost?

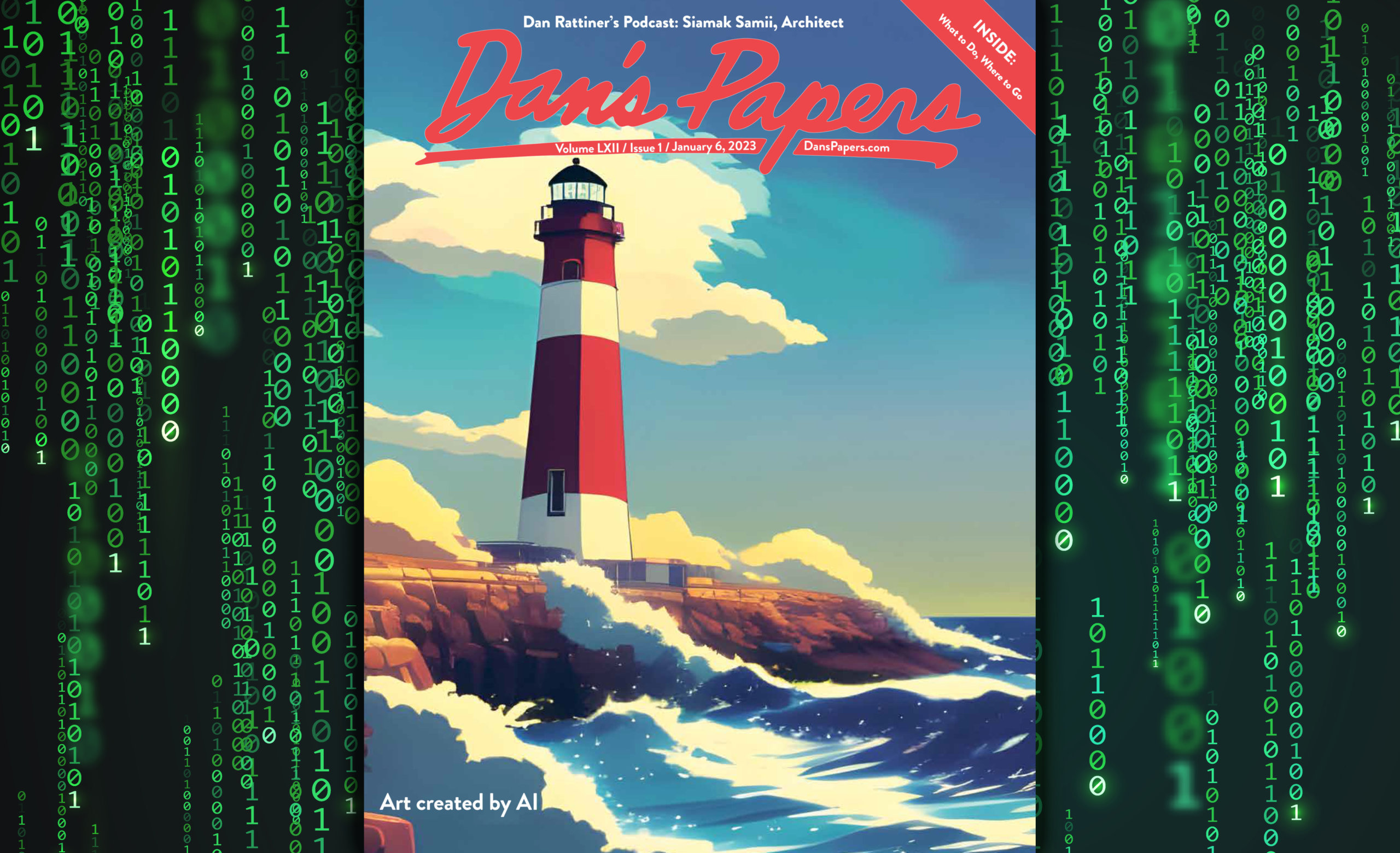

This week’s Dan’s Papers cover art, featuring a version of the Montauk Lighthouse, was made entirely by AI, but in many ways it resembles the covers we’ve published over the last 35 years.

Since Elaine de Kooning’s painting appeared on our September 11, 1987 issue, hundreds of artists have enjoyed the pride and recognition that comes with displaying their work on our cover. But in a world where computers can produce art from a few keystrokes entered by a human, what does that mean for the artists who have spent hours, days and weeks toiling in their studios to create similar images?

While Dan’s has never had to pay the artists who make our covers, it’s easy to see how this growing trend could give a company in need of artwork and illustrations a shortcut around hiring trained professionals. It could save money and time by simply playing with a few word combinations until it gets something that’s “good enough.”

Artists all over the world are not happy about any of this. Some might argue these artists are just whining about the effects of a nascent technology on their business, but the issue is more complicated than that. The moral and ethical implications are quite real, and their rage feels justified.

Learning how AI art is made is a key part of understanding the controversy currently swirling around its use.

Without getting into too much technical jargon, here is how the process worked for me when I created this Montauk Lighthouse cover image: I logged on to NightCafe Studio (nightcafe.studio), hit the “Create” button and selected one of five creation methods, each using a different algorithm.

I selected the latest generator, the “Stable” text-to-image AI art algorithm, which is designed to create coherent pictures that follow the laws of physics. From there, I typed “Montauk Lighthouse,” chose the “Anime” style (one of 28 available style options) and hit “Create.” Less than a minute later, I had a slightly different version of the image that now graces the cover of this newspaper, at the cost of half a credit (out of the five credits NightCafe gives users for signing up).

After some consultation, we decided the waves should be larger since this is supposed to be Montauk in winter, so I clicked “Evolve This Creation” and added “large waves” to the original prompt, leaving me with the final image you see on our cover.

What happens on the computer side is of far greater import. These AI programs are trained on massive data sets made up of billions of images pulled from the internet. When the AI gets a prompt, it draws on the patterns it learned from all those images to generate a new picture that matches the inputs and produces an acceptable final result.

The problem comes down to the images the AI learns from. Among them are people’s personal photographs, copyrighted artwork and every other kind of visual data spread across the internet, and they’re used without permission.

Some of the most popular AI programs, like the ones that create those artistic avatars popping up all over social media, even offer filters based on specific artists’ work. It’s no surprise that artists, who are sharing side-by-side comparisons of their work and strikingly similar AI creations, feel concerned and violated.

Popular YouTuber Sam Yang, of Sam Does Arts, explains all this quite succinctly in a video called “Why Artists Are Fed Up with AI Art.”

“If you’ve shared your work online, or even images of yourself, your house, your environment, you might have been included in these data sets,” Yang points out, adding, “The moment we share them online, we’ve opted in to the system. If your work so much as exists online, it will be found, and it will be used in a piece of AI art.”

Sooner or later, I imagine the millions of AI-generated pieces will be incorporated into these data sets as well, and AI will begin basing its art on other AI art. That will launder content even further from its original sources and leave us with a trove of watered-down work that all begins to look the same.

In spite of all this, I have to admit that creating AI art is a lot of fun. I’ve quite enjoyed playing with long, convoluted text prompts and evolving images with added text, but I’m careful not to call this my own art. I wouldn’t try to sell it as such or submit it to a contest, as Jason Allen did with his AI-generated piece “Théâtre D’opéra Spatial,” which took first place in the Colorado State Fair’s Digital Art category last fall and helped bring criticism of, and conversation about, AI art to the fore.

There is, of course, a place for AI, but it may take some time to find the best way forward, one in which it helps the art world rather than harming the livelihoods of individual artists.

Within its own bubble, a site like NightCafe Studio offers amusing, themed contests and gives users credits for submitting work, voting on others’ work in daily challenges and reaching milestones, such as having your first piece land in the top 20% with voters.

But it would be a bit delusional for so-called “AI artists” to take full credit for the things they create using these tools.

Oliver Peterson’s actual art, made with his own two hands and the occasional computer, can be found at @oliverpetersonart or @oliversees on Instagram. He’s also quite worried about AI journalists taking his job.